The barriers to entering the high-end video production market have historically centered on the heavy capital investment required for specialized hardware and the deep technical knowledge needed to master complex visual effects software. Many small-scale creators and marketing departments are trapped in a cycle of high costs and slow delivery times, unable to iterate quickly enough to keep pace with modern social media algorithms. Seedance 2.0, a generative video system that shifts the focus from manual frame-by-frame manipulation to high-level directorial intent, is starting to dismantle this bottleneck. Built on diffusion transformer technology, it allows rapid creation of professional-grade assets that were previously the exclusive domain of large animation studios.

The pressure on the creative industry stems from the fact that visual quality is no longer a luxury but a basic requirement for audience engagement. When 1080p and 4K are baseline expectations, content that looks amateurish or shows technical glitches is quickly discarded. Storytellers often compromise their vision because rendering realistic motion or keeping a character consistent across shots is so complex. The answer is a workflow that treats the AI as a capable production assistant: one that interprets a director’s intent and executes it reliably. Because visual and audio output are generated together as a single coherent result, production moves from a fragmented series of tasks to a unified creative act.

Bridging the Gap between Conceptual Storyboards and Final Rendered Footage

In my professional observation, the most significant change brought about by modern generative models is the collapse of the pre-production and production phases. Traditionally, moving from a storyboard to a finished scene involved a long chain of animators, lighters, and compositors. Current systems allow a single individual to act as the architect of the entire scene. The ability to describe a complex environment and have the system generate it in 1080p resolution changes the economic equation of content creation. It enables a level of experimentation that was previously impossible, as the cost of “re-shooting” a scene is reduced to the time it takes to refine a text prompt.

The realism observed in recent outputs is largely due to the improved understanding of lighting and texture. Unlike older generative tools that often produced flat or plastic-looking surfaces, the current architecture handles light interaction—such as reflections on water or the way light filters through trees—with a much higher degree of authenticity. In my tests, the resulting footage often requires minimal color grading, as the AI already applies a cinematic aesthetic based on the stylistic cues provided in the prompt. This level of initial quality allows for a more direct transition from generation to final distribution.

Advanced Motion Control and Physics Simulation for Natural Character Interaction

A major technical advance in this generation of models is the way it simulates physical interactions. Movement is no longer a simple animation of pixels; it is grounded in a spatial-temporal model that accounts for weight and resistance. When a character interacts with an object, the resulting motion feels deliberate and weighted. For example, if a prompt describes a character walking into a strong wind, the model renders the resistance in the character’s posture, and their clothing moves accordingly. This attention to detail is what separates a professional cinematic experience from basic animation.

Maintaining Visual Coherence during Complex Multi-Shot Scene Transitions

Consistency remains the holy grail of AI-assisted filmmaking. The current model is strong at preserving the identity of subjects as the camera moves or the scene transitions. By using a shared latent space for all shots within a sequence, the system keeps facial features, proportions, and attire from shifting unexpectedly. In my experience, this capability is particularly effective for multi-shot narratives in which a character must be seen from different angles. The AI maintains a “mental model” of the subject, so the character at the start of a 60-second clip remains visually consistent with the character at the end.

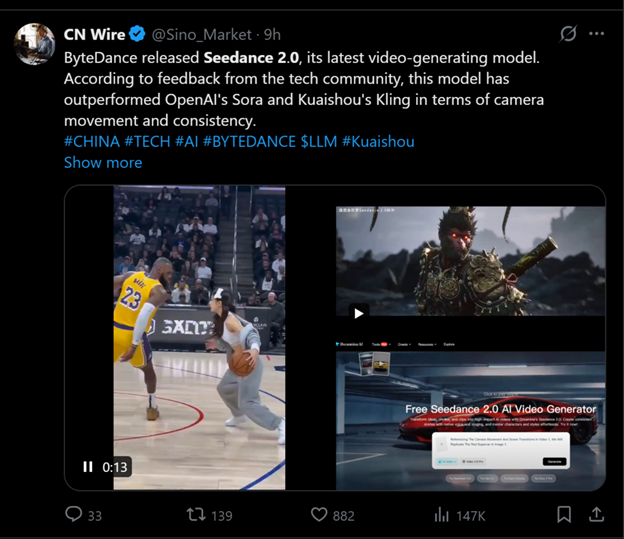

Evaluating Production Efficiencies across Contemporary Generative AI Video Platforms

To better understand the shift in production value, it is helpful to compare the capabilities of advanced generative systems against the standard benchmarks of the previous year. The following table highlights the technical improvements that facilitate a more professional workflow.

| Production Factor | Standard Generative Baseline | Seedance 2.0 Advanced Workflow |
| --- | --- | --- |
| Resolution Standards | 720p with frequent artifacts | 1080p Full HD |
| Audio Synchronization | External sound design required | Integrated environmental soundscapes |
| Temporal Stability | Clip fragmentation after 5s | Stable narrative flow up to 60s |
| Physics Engine | Basic linear motion | Complex spatial-temporal physics |
| Prompt Interpretation | Basic object identification | Director-level cinematic cues |
| Workflow Integration | Isolated clip generation | Production-ready MP4 exports |

This comparison illustrates a move toward a more comprehensive production environment. The inclusion of native audio synthesis is a particularly notable efficiency. By generating environmental sounds—such as the hum of city traffic or the sound of footsteps—at the same time as the video, the system eliminates a significant portion of the sound editing phase. While the lip-sync capabilities are still in an early stage compared to specialized audio models, the integration of basic speech alignment provides a solid foundation for further refinement in professional editing software.

Operational Guidelines for Transforming Scripted Prompts into 1080p Masterpieces

The operational flow for generating high-quality video content is designed to be accessible while offering deep control for advanced users. The following steps outline the official process for creating assets.

Step 1: Visual and Narrative Prompting

The user provides the initial creative input through a detailed text prompt or by uploading a reference image. This is the stage where the director defines the scene’s mood, lighting, and character actions. Precise language regarding the camera type and lens—such as an 85mm portrait shot—helps the AI narrow down the visual style.
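Step 1 amounts to assembling a few distinct cues (subject and action, mood, lighting, camera and lens) into one prompt. The following is a minimal Python sketch, assuming the prompt is a plain comma-separated text string. The `ShotPrompt` class and its field names are hypothetical and are not part of any Seedance interface.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    """Hypothetical container for the Step 1 creative inputs (not a Seedance API)."""
    subject: str   # who or what is on screen, and what they are doing
    mood: str      # emotional tone of the scene
    lighting: str  # lighting setup, e.g. "soft neon reflections"
    camera: str    # camera type and lens, e.g. "85mm portrait shot"

    def render(self) -> str:
        """Join the non-empty fields into one comma-separated text prompt."""
        parts = [self.subject, self.mood, self.lighting, self.camera]
        # Skip blank fields so the prompt never contains stray separators.
        return ", ".join(p.strip() for p in parts if p.strip())


prompt = ShotPrompt(
    subject="a violinist performing on a rain-soaked rooftop",
    mood="melancholic, quiet",
    lighting="soft neon reflections on wet concrete",
    camera="85mm portrait shot, shallow depth of field",
)
print(prompt.render())
```

Keeping each cue in its own field makes it easy to change one variable (for example, only the lens) between attempts while holding the rest of the prompt fixed.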

Step 2: Technical Configuration and Scaling

Once the concept is defined, the user configures the technical specifications. This includes selecting the aspect ratio that fits the target platform and choosing the final resolution. Users can opt for lower resolutions for initial testing and then scale up to 1080p for the final production.
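Choosing an aspect ratio and resolution in Step 2 comes down to simple arithmetic on frame dimensions. The sketch below assumes that a resolution label such as 1080p names the short side of the frame, so a vertical 9:16 clip at 1080p is 1080x1920. The helper name and the preset table are hypothetical and are not settings exposed by the tool.

```python
from fractions import Fraction

# Hypothetical presets; the "label = short side" convention is an assumption.
ASPECT_RATIOS = {
    "16:9": Fraction(16, 9),  # landscape
    "9:16": Fraction(9, 16),  # vertical, short-form feeds
    "1:1": Fraction(1, 1),    # square
}


def _even(value: Fraction) -> int:
    """Round to the nearest even integer; H.264 with 4:2:0 chroma needs even sizes."""
    return round(value / 2) * 2


def frame_size(aspect: str, short_side: int) -> tuple[int, int]:
    """Return (width, height) in pixels for an aspect ratio and short-side resolution."""
    ratio = ASPECT_RATIOS[aspect]
    if ratio >= 1:
        # Landscape or square: the height is the short side.
        width, height = Fraction(short_side) * ratio, Fraction(short_side)
    else:
        # Portrait: the width is the short side.
        width, height = Fraction(short_side), short_side / ratio
    return _even(width), _even(height)


# Cheap preview first, then the final 1080p render.
for label in (480, 1080):
    print(label, frame_size("9:16", label))
```

Running the loop prints `480 (480, 854)` and `1080 (1080, 1920)`, which shows how a low-resolution test render keeps the same framing as the final output.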

Step 3: Multimodal Generation and Audio Synthesis

The system begins the processing phase, which involves generating both the visual frames and the synchronized audio. The AI works through a two-stage process: first creating a core visual structure and then adding the fine textures and environmental sounds that give the scene its cinematic depth.

Step 4: Review and Production Export

The final step is the review of the generated sequence. If the motion and lighting meet the production standards, the video is exported as a watermark-free MP4 file. This file is ready for immediate integration into larger projects or for direct publication to digital channels.

Understanding Technological Boundaries and Strategic Approaches to Iterative Generation

Despite the rapid advancements, it is crucial for professionals to understand the current limitations of generative video technology. AI is an iterative tool, and the first result is not always the final one. In my testing, I have found that highly specific interactions between multiple characters can sometimes lead to unexpected visual overlaps or minor inconsistencies in the background. Furthermore, the accuracy of the output is heavily reliant on the clarity of the input; vague prompts often result in generic visuals that may not align with a specific brand identity.

Successful creators approach this tool with an iterative mindset. Rather than expecting a perfect 60-second masterpiece on the first attempt, the most effective strategy involves generating several short variations to test different lighting and motion styles. Once a successful visual language is established, it can be scaled and extended into longer narrative sequences. This approach acknowledges that while the AI handles the heavy lifting of rendering and physics, the human director remains the ultimate arbiter of taste and narrative coherence.
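The variation strategy described above is essentially a small grid search over style cues. The following is a minimal Python sketch, assuming each variation is a text prompt submitted separately as a short test clip. The option lists and the `variation_prompts` function are illustrative and hypothetical. Submitting the prompts to a generator is left out because no API is described here.

```python
from itertools import product

# Hypothetical style options to explore; replace with your own cues.
LIGHTING = ["overcast daylight", "golden hour backlight", "neon night"]
MOTION = ["slow dolly-in", "static tripod shot"]


def variation_prompts(base: str, lighting: list[str], motion: list[str]) -> list[str]:
    """Return one prompt per (lighting, motion) pair, for short test renders."""
    return [f"{base}, {light}, {move}" for light, move in product(lighting, motion)]


batch = variation_prompts("a cyclist crossing a city bridge", LIGHTING, MOTION)
print(len(batch))  # 3 lighting options x 2 motion options
print(batch[0])
```

This yields six candidate prompts. After reviewing the short clips, the winning lighting and motion pair becomes the fixed visual language for the longer sequence.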

The democratization of visual effects through tools like Seedance 2.0 does not replace the need for creative talent; rather, it amplifies it. By removing the technical hurdles that once stopped great ideas from reaching the screen, the industry is entering an era where the quality of the story is the only real limit. As we look toward the future, the ability to rapidly prototype and produce high-fidelity video will become a standard skill for all digital storytellers, leading to a more diverse and vibrant media landscape.