In the rush to release features, modern development teams have embraced AI coding assistants with open arms. It is easy to see why: tools that auto-complete functions and generate boilerplate code can increase velocity by 40% or more. But in the world of cybersecurity, speed often comes at the expense of safety.

Security researchers are now uncovering a disturbing trend. AI models are not just writing code; they are unintentionally introducing “Trojan Horses” into enterprise applications. From hallucinated software dependencies that hackers can exploit, to regurgitating outdated, vulnerable encryption methods, the risks are real and growing.

For any organization that values data security and legal compliance, blind trust is no longer an option. Implementing a rigorous scanning protocol using a privacy-first AI code detector is one way to help insulate your software supply chain from these emerging threats.

In this article, we will expose the specific security flaws inherent in Large Language Models (LLMs) and how Decopy.ai provides the defensive layer your DevSecOps workflow is missing.

The Danger of “Hallucinated Packages”

One of the most bizarre and dangerous phenomena in AI coding is the “Hallucinated Package.”

LLMs do not understand the software ecosystem; they simply predict the next token. Occasionally, when an AI is asked to solve a specific problem, it will confidently recommend importing a library that does not exist. It invents a name that sounds plausible, like fast-json-parser-v2.

Here is the nightmare scenario:

- A developer prompts an AI for a solution.

- The AI suggests code importing a non-existent package.

- The developer copies and pastes it, assuming the library is real and simply not installed yet.

- The Attack: Bad actors monitor these common AI hallucinations. They register the hallucinated package name on public registries like npm or PyPI and upload malicious code under it.

- When the developer runs npm install, they are not installing a utility; they are installing a backdoor into the company’s network.

This is a form of software supply chain attack, sometimes called “slopsquatting.” To prevent it, you need to know when code was generated by an AI so you can scrutinize every dependency it imports. If Decopy.ai flags a block of import statements as machine-generated, treat that as a red flag and manually verify that every library listed is legitimate and secure.

The “Zombie Vulnerability” Problem

AI models are trained on massive datasets of code scraped from the internet, including code written in 2015, 2018, and 2020. Much of this code contains vulnerabilities that have since been discovered and patched, but the model has no way of knowing that.

An AI might generate a user authentication function that uses MD5 hashing (which is broken for collision resistance and far too fast to protect passwords) simply because MD5 appears frequently in its older training data. It might write a SQL query that lacks proper parameterization, leaving you open to SQL Injection attacks.

Human developers, lulled into a false sense of security by the AI’s confident syntax, often skim over these flaws.

How Detection Mitigates Risk:

By using Decopy.ai, you can segregate code based on origin.

- Human Code: Review for logic errors.

- AI Code: Review specifically for security errors and outdated patterns.

Knowing the source changes the lens through which you review the code. A high AI probability score tells your security team: “Do not trust this code. Check it against the latest CVE (Common Vulnerabilities and Exposures) database.”
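The routing rule above can be sketched in a few lines of Python. Everything here is hypothetical: the article does not define a score format or a cutoff, so the sketch assumes a probability between 0.0 and 1.0 and an arbitrary threshold of 0.8.

```python
from dataclasses import dataclass

# Hypothetical cutoff; pick one that matches your own risk tolerance.
AI_THRESHOLD = 0.8

HUMAN_CHECKLIST = ["logic errors"]
AI_CHECKLIST = [
    "security errors",
    "outdated patterns (e.g. MD5, unparameterized SQL)",
    "known CVEs affecting the APIs and dependencies used",
]


@dataclass
class CodeBlock:
    path: str
    ai_probability: float  # assumed to be a score between 0.0 and 1.0


def review_checklist(block: CodeBlock, threshold: float = AI_THRESHOLD) -> list[str]:
    """Pick the review focus based on the block's likely origin."""
    if block.ai_probability >= threshold:
        return AI_CHECKLIST
    return HUMAN_CHECKLIST


print(review_checklist(CodeBlock("auth.py", 0.93)))
```

In practice a team may want the AI checklist to extend the human one instead of replacing it; the split shown here simply mirrors the two bullets above.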

The Legal Trap: Open Source License Laundering

Beyond security, there is a massive legal risk: License Contamination.

AI models are trained on open-source code, including code under copyleft licenses like the GPL (General Public License). The GPL is often described as a “viral” license; if you distribute a proprietary application that incorporates GPL code, you may be legally required to release the source code of the combined work under the GPL.

GitHub, Microsoft, and OpenAI have been sued over this exact issue. If an AI regurgitates a distinctive sorting algorithm from a GPL-licensed project and your developer pastes it into your commercial product, you may be walking into a lawsuit.

Because AI output usually arrives without the original license headers and comments, you have no way of knowing where the code came from. However, you can estimate whether it was AI-generated.

If you use our tool to detect AI code, you can identify the risk zones. If a complex algorithm is flagged as AI-generated, a prudent legal team will require that the code be rewritten by a human engineer to ensure a “clean room” implementation, free from potential copyright infringement.

Decopy.ai: The Security-First Architecture

We understand that you cannot paste sensitive, proprietary code into a web tool that might steal it. That would defeat the purpose of security.

This is why Decopy.ai is the preferred choice for security-conscious organizations:

- Stateless Processing: We do not store your code. Once the analysis is done (in milliseconds), the data evaporates.

- Client-Side Privacy: We do not require you to create a user profile that links your IP address to specific code snippets.

- No Training Loop: Unlike some “free” tools, we do not use your submissions to make our AI smarter. Your intellectual property remains yours.

A New Protocol for DevSecOps

To survive in an AI-saturated world, development teams need to update their protocols. We advocate for a “Zero Trust” approach to generated content.

1. The “Quarantine” Phase

Treat AI-generated code like untrusted input. It should not be merged directly into the main branch. Developers should be encouraged to use AI for brainstorming, but every commit should be verified by a human before it is merged.

2. The Verification Gate

Before a Pull Request is approved, run the diff through Decopy.ai.

- If the diff is 100% AI-generated, reject the PR. Ask the developer to refactor the code, verify dependencies, and ensure current security standards are met.

- This adds a “Human in the Loop” checkpoint that prevents lazy, vulnerable code from accumulating in your repository.
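A gate like this could be wired into a review script roughly as follows. This is a sketch under stated assumptions, not Decopy.ai's interface: the score callable is a hypothetical stand-in for whatever detector you use, assumed to return an AI probability between 0.0 and 1.0 for a string of code.

```python
from typing import Callable

# Hypothetical scorer: takes added code, returns an AI probability in [0, 1].
Scorer = Callable[[str], float]


def added_lines(diff: str) -> str:
    """Collect the lines a unified diff adds, skipping the '+++' file header."""
    return "\n".join(
        line[1:]
        for line in diff.splitlines()
        if line.startswith("+") and not line.startswith("+++")
    )


def gate(diff: str, score: Scorer, reject_at: float = 1.0) -> tuple[bool, str]:
    """Return (approved, reason) for a pull request diff."""
    added = added_lines(diff)
    if not added.strip():
        return True, "no added code to check"
    probability = score(added)
    if probability >= reject_at:
        return False, (
            f"AI probability {probability:.0%}: refactor, verify dependencies, "
            "and confirm current security standards before resubmitting"
        )
    return True, f"AI probability {probability:.0%}: proceed to human review"
```

The default reject_at of 1.0 mirrors the "100% AI-generated" rule above; a stricter team could lower it. Only added lines are scored, so untouched context in the diff does not dilute the result.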

3. The Audit Trail

For agency owners and software houses, clients are starting to demand “AI Transparency Reports.” They want to know how much of their software was written by machines. Using Decopy allows you to spot-check your team’s work and ensure you are delivering the human expertise the client paid for.

Why Syntax Analysis Matters for Security

You might ask, “Why can’t I just look at the code?”

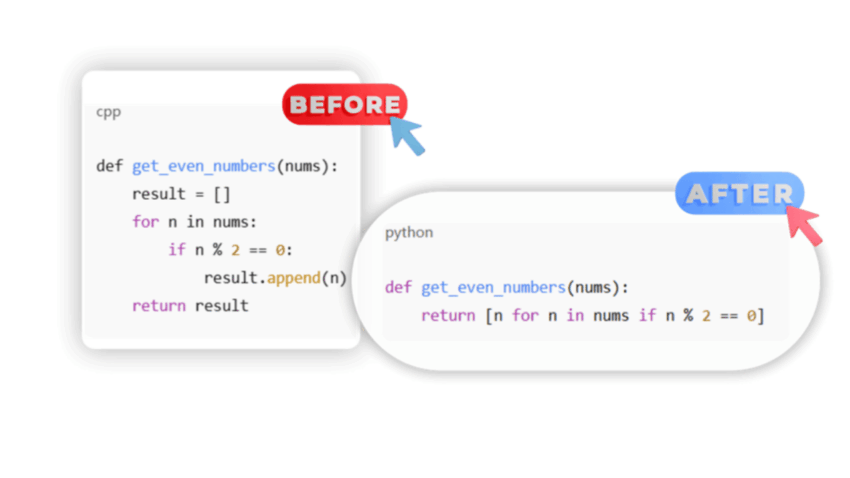

The problem is that AI code looks perfect. It is often cleaner and better formatted than human code. This superficial beauty hides the structural rot underneath.

Decopy.ai analyzes how statistically probable the code’s syntax is. AI models are statistical engines; they choose the most probable code structure. This makes their output “low perplexity.” Human code is “high perplexity”: it contains idiosyncratic choices, creative workarounds, and non-standard optimizations.
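To make the term concrete: perplexity is conventionally defined as the exponential of the average negative log-probability a language model assigns to each token it sees. The sketch below computes that standard definition from a list of per-token probabilities. The probabilities are made up for illustration, and nothing here describes how Decopy.ai itself is implemented.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """exp of the average negative log-probability of the observed tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Made-up probabilities a language model might assign to each token.
predictable = [0.9, 0.8, 0.95, 0.85]   # the model expected almost every token
idiosyncratic = [0.2, 0.05, 0.4, 0.1]  # the model was repeatedly surprised

print(perplexity(predictable) < perplexity(idiosyncratic))  # True
```

A sequence the model finds unsurprising scores close to 1, while one full of unexpected choices scores much higher. A detector built on this idea still has to decide where to draw the line between the two.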

From a security perspective, “predictable” can mean “exploitable.” Hackers use AI too. They know how AI tends to write code. If your application is built entirely on standard, predictable AI patterns, it is easier for attackers to anticipate your system’s internal logic and find weaknesses. Introducing human “chaos” and creativity into your code can make your system harder for an attacker to anticipate.

Conclusion: Defense in Depth

The era of AI coding is here, and it is not going away. It offers incredible benefits for productivity. But as every security professional knows, there is no such thing as a free lunch. The cost of AI speed is the potential for new, invisible risks.

Your codebase is your company’s most valuable asset. Do not let it become compromised by hallucinated dependencies, outdated security practices, or accidental copyright infringement.

Adopt a posture of active defense. Use Decopy.ai to verify the integrity of your software. By distinguishing between human ingenuity and machine mimicry, you can ensure that your application is not just fast to build, but safe to deploy. Detect AI code before the hackers do, and build your future on a foundation of trust.