Computer vision and machine learning systems are increasingly present in everyday applications. From facial recognition and medical imaging to autonomous vehicles and industrial inspection, these technologies rely on the ability to interpret visual data accurately. While advances in model architectures and computing power have accelerated progress, one foundational element continues to determine system performance: data annotation.

Data annotation plays a critical role in transforming raw visual data into structured information that machine learning models can understand. Without well-annotated datasets, even the most advanced algorithms struggle to learn meaningful patterns. As computer vision applications move from research environments into real-world deployment, the importance of robust annotation processes becomes increasingly clear.

Why raw visual data is not enough

Images and videos contain vast amounts of information, but they are unstructured by nature. Pixels alone do not convey meaning to a machine learning model. For a system to recognize objects, detect anomalies, or understand scenes, it must be trained using examples where the desired outputs are clearly defined.

Data annotation provides this structure by associating visual elements with labels, categories, or spatial boundaries. These annotations serve as the ground truth that guides the learning process. When annotations are inaccurate or inconsistent, models learn incorrect relationships that degrade performance during deployment.

In computer vision, annotation quality often affects results more than incremental changes to model architecture. A simpler model trained on well-labeled data can outperform a more complex one trained on a poor-quality dataset.

The different forms of annotation in computer vision

Computer vision tasks vary widely, and so do annotation methods. The type of annotation required depends on the problem being solved and the level of detail needed by the model.

Image classification

Image classification assigns a single label to an entire image. This approach is commonly used when identifying the presence of an object or category without needing precise localization. While classification is relatively simple compared to other techniques, it still requires consistent labeling rules to avoid ambiguity.

Object detection

Object detection identifies and localizes multiple objects within an image, typically using bounding boxes. This method is widely used in applications such as traffic analysis, retail analytics, and surveillance. Accurate bounding box placement and consistent object definitions are essential for reliable detection performance.
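
One common way to quantify how accurately a bounding box is placed is intersection over union (IoU) between an annotator's box and a reference box. The following is a minimal sketch; the `(x_min, y_min, x_max, y_max)` box format and the function name `iou` are assumptions made for illustration, not a convention taken from any particular tool.

```python
from typing import Tuple

# Assumed box format: (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Return intersection over union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle, if there is one.
    ix_min = max(a[0], b[0])
    iy_min = max(a[1], b[1])
    ix_max = min(a[2], b[2])
    iy_max = min(a[3], b[3])

    # Clamp to zero so that non-overlapping boxes give no intersection.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    intersection = inter_w * inter_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


# Hypothetical example: a reference box and a slightly shifted annotation.
reference_box = (10, 10, 50, 50)
annotated_box = (12, 8, 52, 48)
overlap = iou(reference_box, annotated_box)
```

An IoU of 1.0 means the boxes coincide and 0.0 means they do not overlap. Any acceptance threshold is a project-level choice rather than a fixed standard.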

Semantic and instance segmentation

Segmentation assigns labels at the pixel level, allowing models to understand object boundaries and spatial relationships in detail. Semantic segmentation labels each pixel by class, while instance segmentation distinguishes between individual objects of the same class. These approaches are critical in fields such as medical imaging, autonomous driving, and satellite imagery analysis.

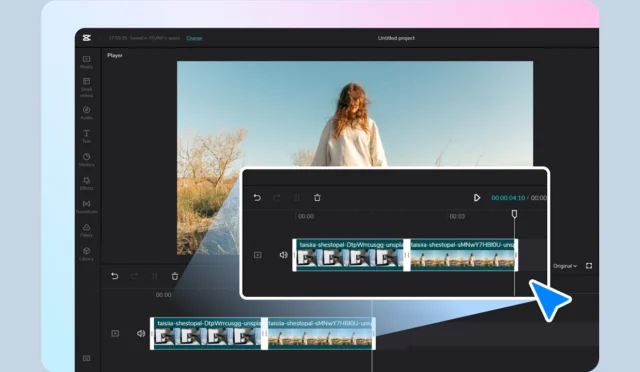
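
The difference between semantic and instance segmentation can be illustrated with a toy mask. In this sketch, the mask is a small grid of instance IDs and a lookup table maps each instance to its class. The grid, the IDs, and the helper names are all hypothetical; real masks are usually stored as image arrays.

```python
# Toy instance mask: each cell holds an instance ID (0 is background).
instance_mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 2, 2],
]

# Assumed lookup from instance ID to class name: two separate cars.
instance_to_class = {0: "background", 1: "car", 2: "car"}


def to_semantic(mask, id_to_class):
    """Collapse an instance mask into a semantic mask of class names."""
    return [[id_to_class[instance_id] for instance_id in row] for row in mask]


def count_instances(mask, id_to_class, class_name):
    """Count distinct instances of one class present in the mask."""
    ids = {
        instance_id
        for row in mask
        for instance_id in row
        if id_to_class[instance_id] == class_name
    }
    return len(ids)


semantic_mask = to_semantic(instance_mask, instance_to_class)
car_count = count_instances(instance_mask, instance_to_class, "car")
```

The semantic mask only records that certain pixels are "car", while the instance mask still distinguishes the two cars. This is why instance-level labels can be collapsed into semantic ones but not the reverse.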

Video annotation

Video-based models require temporal consistency across frames. Video annotation may involve tracking objects over time, labeling actions, or identifying events. Maintaining consistency across thousands of frames adds significant complexity to annotation workflows.
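
A simple automated check for temporal consistency is to verify that each tracked object keeps one label and appears in consecutive frames. The sketch below assumes annotations arrive as `(frame_index, track_id, label)` tuples; that layout and the function name `find_track_issues` are illustrative assumptions. A missing frame is not always an error, since an object may be occluded, so the output is best treated as a list of items to review.

```python
from collections import defaultdict


def find_track_issues(annotations):
    """Flag tracks whose label changes or whose frame sequence has gaps.

    `annotations` is an iterable of (frame_index, track_id, label) tuples.
    Returns a dict mapping track_id to a list of problem descriptions.
    """
    labels = defaultdict(set)
    frames = defaultdict(set)
    for frame_index, track_id, label in annotations:
        labels[track_id].add(label)
        frames[track_id].add(frame_index)

    issues = {}
    for track_id in labels:
        problems = []
        if len(labels[track_id]) > 1:
            problems.append("label changes: " + ", ".join(sorted(labels[track_id])))

        # Frames between the first and last sighting that carry no annotation.
        seen = sorted(frames[track_id])
        missing = sorted(set(range(seen[0], seen[-1] + 1)) - frames[track_id])
        if missing:
            problems.append(f"missing frames: {missing}")

        if problems:
            issues[track_id] = problems
    return issues


# Hypothetical annotations: track "t1" changes label and skips frame 2.
example_annotations = [
    (0, "t1", "car"), (1, "t1", "car"), (3, "t1", "truck"),
    (0, "t2", "person"), (1, "t2", "person"),
]
track_issues = find_track_issues(example_annotations)
```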

How annotation quality affects machine learning outcomes

Machine learning models learn statistical patterns from annotated examples. When annotations are noisy or inconsistent, models internalize those inconsistencies, leading to unstable or biased predictions.

Common annotation-related issues include:

- Inconsistent label definitions across datasets

- Ambiguous handling of edge cases

- Inaccurate object boundaries or missing labels

- Class imbalance caused by underrepresented scenarios

These issues can significantly reduce a model’s ability to generalize to new data. In production environments, this often results in unpredictable behavior and increased error rates.
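
Of the issues listed above, class imbalance is one of the easiest to detect automatically. As a rough sketch, the share of each class can be computed from the label list and compared against a minimum threshold. The function name and the 5% default are arbitrary assumptions for illustration; a suitable threshold depends on the task.

```python
from collections import Counter


def underrepresented_classes(labels, min_share=0.05):
    """Return classes whose share of all labels is below `min_share`."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {
        name: count / total
        for name, count in counts.items()
        if count / total < min_share
    }


# Hypothetical dataset: 90 cars, 8 bikes, 2 scooters.
example_labels = ["car"] * 90 + ["bike"] * 8 + ["scooter"] * 2
rare_classes = underrepresented_classes(example_labels)
```

Classes flagged this way are candidates for targeted data collection or additional annotation.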

The importance of consistency over time

As datasets grow, maintaining annotation consistency becomes more challenging. New annotators, evolving guidelines, or changes in project scope can introduce variation. Without strong quality control and documentation, datasets accumulate hidden inconsistencies that are difficult to correct later.

Consistent annotation practices help models learn stable patterns and behave predictably when exposed to new data.

Data annotation as a scalable process

In early-stage projects, annotation is often handled manually by small teams. While this approach may work for prototypes, it quickly becomes a bottleneck as data volumes increase. Scaling computer vision systems requires annotation workflows that are designed for volume, quality, and repeatability.

Annotation guidelines and standards

Clear annotation guidelines are essential for scaling. They define how objects should be labeled, how to handle ambiguous cases, and how to maintain consistency across datasets. Well-documented standards reduce subjective interpretation and improve inter-annotator agreement.
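
Inter-annotator agreement can be measured in several ways. One widely used statistic for two annotators assigning categorical labels is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a plain-Python illustration that assumes both annotators labeled the same items in the same order.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("Both annotators must label the same non-empty item list.")

    n = len(labels_a)
    # Observed agreement: fraction of items given identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n

    # Chance agreement: from each annotator's own label frequencies.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)

    if expected == 1.0:
        # Both annotators used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on four images.
annotator_1 = ["cat", "cat", "dog", "dog"]
annotator_2 = ["cat", "cat", "dog", "cat"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

A kappa of 1.0 indicates perfect agreement and values near 0 indicate agreement no better than chance. Low values are often a sign that the guidelines leave room for interpretation.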

Quality control and review

Quality assurance is a critical component of scalable annotation. This may include peer reviews, spot checks, automated validation rules, and performance metrics for annotators. Continuous quality monitoring helps identify systematic errors before they affect model training.
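
Automated validation rules can be as simple as a few structural checks applied to every annotation before review. The following sketch checks a bounding box annotation against three rules. The record layout (a dict with `label` and `bbox` keys), the rule set, and the function name are assumptions for illustration, not the schema of any specific annotation tool.

```python
def validate_box(annotation, image_width, image_height, allowed_labels):
    """Return a list of rule violations for one bounding box annotation.

    `annotation` is assumed to look like:
        {"label": "car", "bbox": (x_min, y_min, x_max, y_max)}
    """
    errors = []
    x_min, y_min, x_max, y_max = annotation["bbox"]

    # Rule 1: the label must come from the agreed class list.
    if annotation["label"] not in allowed_labels:
        errors.append("unknown label")

    # Rule 2: the box must have positive width and height.
    if x_min >= x_max or y_min >= y_max:
        errors.append("non-positive area")

    # Rule 3: the box must lie inside the image.
    if x_min < 0 or y_min < 0 or x_max > image_width or y_max > image_height:
        errors.append("outside image bounds")

    return errors


# Hypothetical class list and annotation for a 100x80 image.
classes = {"car", "person"}
result = validate_box({"label": "car", "bbox": (10, 10, 50, 40)}, 100, 80, classes)
```

An empty list means the annotation passed every rule. Non-empty results can be routed back to annotators or counted per annotator as a quality metric.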

Iterative dataset improvement

Machine learning models often reveal weaknesses in datasets. Poor performance in specific scenarios may indicate missing examples or inconsistent labels. Iterative annotation workflows allow teams to refine datasets based on model feedback, improving performance over time.
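
One way to turn model feedback into annotation priorities is to break evaluation errors down by scenario. The sketch below assumes each evaluation record carries a scenario tag such as "night" or "rain", along with the ground-truth and predicted labels. The tags, the record layout, and the function name are hypothetical.

```python
from collections import defaultdict


def error_rate_by_scenario(records):
    """Rank scenarios by error rate, highest first.

    `records` is an iterable of (scenario, true_label, predicted_label).
    Returns a list of (scenario, error_rate) pairs.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for scenario, true_label, predicted_label in records:
        totals[scenario] += 1
        if true_label != predicted_label:
            errors[scenario] += 1

    rates = {scenario: errors[scenario] / totals[scenario] for scenario in totals}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Hypothetical evaluation records.
example_records = [
    ("day", "car", "car"),
    ("day", "car", "car"),
    ("night", "car", "truck"),
    ("night", "person", "person"),
]
ranked = error_rate_by_scenario(example_records)
```

Scenarios at the top of the ranking are reasonable first candidates for a label audit or for collecting and annotating more examples.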

The connection between annotation and model generalization

Generalization refers to a model’s ability to perform well on unseen data. High-quality annotation directly supports generalization by providing clear, representative examples of real-world conditions.

In computer vision, representative data covers variation in lighting, perspective, object appearance, and environmental context. Annotated datasets that capture this diversity help models adapt to new situations without excessive retraining.

Conversely, datasets that are narrowly focused or inconsistently labeled lead to brittle models that fail outside controlled environments.

Industry applications depend on reliable annotation

Many real-world applications of computer vision depend on precise annotation. In healthcare, segmentation accuracy can influence diagnostic outcomes. In autonomous systems, object detection reliability affects safety. In industrial inspection, annotation quality determines defect detection rates.

As these systems move into regulated or safety-critical domains, annotation quality becomes a matter of compliance and risk management. Organizations must be able to explain how datasets were labeled, validated, and maintained over time.

Specialized providers such as DataVLab support computer vision teams by delivering structured, high-quality annotated datasets tailored to machine learning and visual AI applications. By combining scalable workflows with rigorous quality control, such providers can help organizations deploy reliable models at scale.

Data annotation as a long-term investment

Annotation is often viewed as a one-time cost associated with model training. In practice, it is an ongoing investment. As environments change, new data must be annotated and existing datasets updated. Treating annotation as a continuous process helps organizations adapt to evolving requirements.

Teams that plan for long-term data maintenance avoid costly rework and reduce technical debt. This approach enables sustained performance improvements as models and applications evolve.

Conclusion: annotation underpins effective computer vision systems

Computer vision and machine learning systems rely on data annotation to bridge the gap between raw visual data and meaningful predictions. While advances in algorithms and hardware continue to drive innovation, annotation quality remains a decisive factor in real-world performance.

By investing in structured annotation processes, clear standards, and quality control, organizations can build models that generalize reliably and scale with confidence. Data annotation is not a peripheral task. It is the foundation upon which effective computer vision systems are built.